Introduction to AI

XForms and AI

...

Questions about XML? Join the XML Slack at https://xmlslack.evolvedbinary.com.

More XML and XForms: Declarative Amsterdam conference, online and live, November 2 and 3.

We try to get a computer program to act like a human.

Or maybe even better.

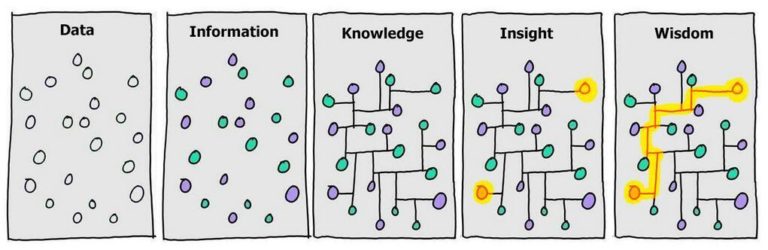

We build up knowledge by observation, identifying patterns, and learning.

There are currently two streams for doing AI: encoding knowledge, and learning.

Let's take an example.

Noughts and Crosses/Tic Tac Toe: I imagine everyone here can play it perfectly.

Exercise: encode for yourself the rules you use for playing the game.

Do this declaratively, not procedurally: given a particular state of the board, how would you respond?

Although there are nearly 20,000 (3⁹ = 19,683) possible combinations of X, O and blank on the board, surprisingly there are only 765 essentially different legal board positions, once rotations and reflections are taken into account.

For instance, these 4 are all the same:

    X . .   . . X   . . .   . . .
    . . .   . . .   . . .   . . .
    . . .   . . .   . . X   X . .

These 8 are all the same:

    X O .   X . .   . O X   . . X
    . . .   O . .   . . .   . . O
    . . .   . . .   . . .   . . .

    . . .   . . .   . . .   . . .
    . . O   . . .   O . .   . . .
    . . X   . O X   X . .   X O .
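The 765 figure can be checked mechanically. The sketch below is in Python (my choice, not anything from the talk) and assumes a board is a 9-character string read row by row. It generates every position reachable by legal play from the empty board, stopping when someone has won or the board is full, and then maps each position to one representative under the eight rotations and reflections of the square. Choosing the alphabetically smallest variant as the representative is an arbitrary assumption; any consistent choice works.

```python
# The eight winning lines, as indexes into a 9-character board string read row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6), (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]

# The eight symmetries of the square, each mapping a cell (row, col) to its new (row, col).
SYMMETRIES = [
    lambda r, c: (r, c),          # unchanged
    lambda r, c: (c, 2 - r),      # rotate a quarter turn
    lambda r, c: (2 - r, 2 - c),  # rotate a half turn
    lambda r, c: (2 - c, r),      # rotate three quarter turns
    lambda r, c: (r, 2 - c),      # mirror left-right
    lambda r, c: (2 - r, c),      # mirror top-bottom
    lambda r, c: (c, r),          # mirror in one diagonal
    lambda r, c: (2 - c, 2 - r),  # mirror in the other diagonal
]

def winner(board):
    """Return "X" or "O" if that side has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None

def transform(board, symmetry):
    """Apply one symmetry to a board."""
    out = [" "] * 9
    for r in range(3):
        for c in range(3):
            nr, nc = symmetry(r, c)
            out[nr * 3 + nc] = board[r * 3 + c]
    return "".join(out)

def canonical(board):
    """One representative for all boards equivalent under symmetry: the smallest string."""
    return min(transform(board, s) for s in SYMMETRIES)

def legal_positions():
    """Every board reachable from the empty board, X moving first, stopping once a game ends."""
    start = " " * 9
    seen, todo = {start}, [start]
    while todo:
        board = todo.pop()
        if winner(board) or " " not in board:
            continue  # a won or full board has no follow-on boards
        player = "X" if board.count("X") == board.count("O") else "O"
        for i, cell in enumerate(board):
            if cell == " ":
                nxt = board[:i] + player + board[i + 1:]
                if nxt not in seen:
                    seen.add(nxt)
                    todo.append(nxt)
    return seen

positions = legal_positions()
unique = {canonical(b) for b in positions}
print(len(positions), "legal positions,", len(unique), "after removing symmetries")
```

Restricting `unique` to boards with a single X gives the three essentially different opening moves, and boards with one X and one O give the 2 + 5 + 5 = 12 distinct replies.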

So we take each of the 765 boards, and record which move we would make from that position.

Now we have an encoding of our knowledge of noughts and crosses, and the program can use that to play against an opponent.

For the learning method, again enumerate all 765 boards.

Link each board with its possible follow-on boards. For instance, from the empty board (the initial state) there are only three essentially different moves:

    . . .
    . . .
    . . .

to

    X . .   . X .   . . .
    . . .   . . .   . X .
    . . .   . . .   . . .

From the centre opening move, there are only two possible responses:

    . . .
    . X .
    . . .

to

    O . .   . O .
    . X .   . X .
    . . .   . . .

From the corner opening move there are five responses:

    X . .
    . . .
    . . .

to

    X O .   X . O   X . .   X . .   X . .
    . . .   . . .   . O .   . . O   . . .
    . . .   . . .   . . .   . . .   . . O

From the edge opening move there are also five responses:

    . X .
    . . .
    . . .

to

    O X .   . X .   . X .   . X .   . X .
    . . .   O . .   . O .   . . .   . . .
    . . .   . . .   . . .   O . .   . O .

So we have linked all possible board situations to all possible follow-on positions.

Give each of those links a weight of zero.

Play the computer against itself:

Repeatedly make a randomly chosen move from the current board until one side wins, or it's a draw.

When one side has won, add 1 to the weight of each link the winner took, and subtract 1 from each link the loser took. (Do nothing for a draw.)

Then repeat this, playing the game many, many times.

When playing against a human, on the program's turn it makes the move with the highest weight from the current board position.
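A minimal Python sketch of this learning scheme, assuming a board is a 9-character string and a "link" is a (board, next board) pair. For simplicity it does not merge symmetric boards, so it works with all the raw positions rather than the 765 unique ones. The function names are illustrative, not taken from the author's program.

```python
import random
from collections import defaultdict

# The eight winning lines, as indexes into a 9-character board string read row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6), (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]

def winner(board):
    """Return "X" or "O" if that side has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None

def follow_ons(board, player):
    """All boards reachable by `player` making one move."""
    return [board[:i] + player + board[i + 1:] for i, cell in enumerate(board) if cell == " "]

def self_play(weights, rng):
    """Play one game of random moves, then reward the winner's links and punish the loser's."""
    board, player = " " * 9, "X"
    links = {"X": [], "O": []}
    while winner(board) is None and " " in board:
        nxt = rng.choice(follow_ons(board, player))
        links[player].append((board, nxt))
        board, player = nxt, ("O" if player == "X" else "X")
    won = winner(board)
    if won is not None:  # a draw changes nothing
        lost = "O" if won == "X" else "X"
        for link in links[won]:
            weights[link] += 1
        for link in links[lost]:
            weights[link] -= 1

def train(games, seed=0):
    weights = defaultdict(int)  # every link starts with a weight of zero
    rng = random.Random(seed)
    for _ in range(games):
        self_play(weights, rng)
    return weights

def best_move(weights, board, player):
    """Against a human: choose the follow-on board whose link has the highest weight."""
    return max(follow_ons(board, player), key=lambda nxt: weights[(board, nxt)])
```

A call such as `best_move(train(50_000), " " * 9, "X")` picks the opening that the trained weights favour; since training is random, different seeds or numbers of games may favour different openings.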

The two methods have different advantages and disadvantages.

Encoding

⊖ You can only encode what you know.

⊖ This may encode bias.

⊕ You can work out why a decision was made (or get the program to tell you why).

Learning

⊖ Only as good as the training material.

⊖ This can also encode hidden bias.

⊖ It can't explain why.

⊕ It may spot completely new things.

In both cases: it's not true intelligence.

In 1970 women were about 6% of the musicians in American orchestras.

Then they introduced blind auditions.

Now women make up a third of the Boston Symphony Orchestra, and they are half the New York Philharmonic.

Bias, whether intentional or not, is everywhere.

In an attempt to even out sentencing, software has been used to help determine sentences for crimes. Unfortunately, the software was trained on real-world sentences, which are themselves biased.

Exercise.

I have a serving dish. It is Italian, and made of wood. It is green. Here is a list of all its properties, in alphabetic order:

Assemble these words into a phrase describing the serving dish.

Start off with "Serving dish" and then add the words one by one: "Green serving dish", etc.

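Disgusting big old brown leather climbing boots

| Opinion | Size | Age | Shape | Colour | Origin | Material | Purpose | Noun |
|---|---|---|---|---|---|---|---|---|
| disgusting | big | old | | brown | | leather | climbing | boots |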

An Italian glass serving spoon, not a glass serving Italian spoon.

A lovely little bird, not a little lovely bird.

A silly old fool, not an old silly fool.

etc.

Unless we've studied the rules, we can't replicate them, even though we use them all the time.

I wrote two versions of the noughts and crosses game, one using each method.

They play differently!

For instance, for the first move, the first one always goes for the centre, and the second always goes for a corner.

In one we have encoded the intelligence that corners are equivalent. The learning program can't work that out for itself.

Humans are inclined to interpret things from a personal point of view.

We interpret its actions as intelligent, but in fact its actions are entirely mechanical.

We interpret it as the swans feeding the fish, but in fact the swans are just wetting the food, and the fish are taking advantage of what they drop.

[Swans feeding fish]

In the 1960s Joseph Weizenbaum created a program, Eliza, that imitated a Rogerian psychotherapist.

Men are all alike.

IN WHAT WAY?

They're always bugging us about something or other.

CAN YOU THINK OF A SPECIFIC EXAMPLE?

Well, my boyfriend made me come here.

YOUR BOYFRIEND MADE YOU COME HERE?

He says I'm depressed much of the time.

I AM SORRY TO HEAR YOU ARE DEPRESSED

It's true, I am unhappy.

DO YOU THINK COMING HERE WILL HELP YOU NOT TO BE UNHAPPY?

I need some help, that much seems certain.

In a classic example, a departmental secretary who was trying it out asked Weizenbaum to leave the room, because she wanted to talk to it privately.

Recently a similar thing occurred with a Google employee claiming an AI chat program was sentient.

At least the program passed the Turing test.

Another program that has hit the headlines recently is ChatGPT: a very clever program that seems to exhibit intelligence, though that impression is just our pareidolia at work.

The Turing Test is a test proposed by Alan Turing in 1950, to determine if a program has achieved intelligence.

You talk via a chat interface, and you don't know if you are talking to a person or a computer, and you have to ask questions to try and work out which.

An example of such a session:

You: In the first line of the sonnet which reads, "Shall I compare thee to a summer's day," would not "a spring day" do as well or better?

Them: It wouldn't scan.

You: How about "a winter's day." That would scan all right.

Them: Yes, but nobody wants to be compared to a winter's day.

ChatGPT is very verbose:

Me> In the first line of the sonnet which reads 'Shall I compare thee to a summer's day', would not 'a spring day' do as well or better?

ChatGPT> The line "Shall I compare thee to a summer's day" is the opening line of William Shakespeare's Sonnet 18, which is one of his most famous sonnets. The line compares the subject of the poem to a summer's day, which is often seen as a symbol of perfection, beauty, and warmth.

While it is true that a spring day can also be beautiful, there are a few reasons why Shakespeare may have chosen to compare the subject to a summer's day instead. Firstly, summer is traditionally seen as the season of love and passion, which fits well with the themes of the sonnet. Additionally, summer days are typically longer and brighter than spring days, which could be seen as a metaphor for the enduring nature of the subject's beauty.

Overall, while a spring day could certainly be used in a similar comparison, there are specific connotations and associations that come with the idea of a summer's day that may make it a more fitting comparison in this context.

Me> You forgot to mention that it wouldn't scan.

ChatGPT> You are correct, and I apologize for the oversight. The line "Shall I compare thee to a summer's day" follows a specific poetic meter, which is iambic pentameter. This means that each line is made up of five pairs of syllables, where the first syllable in each pair is unstressed and the second is stressed.

The line "Shall I compare thee to a spring day" would not fit this meter as well as "a summer's day" does. The word "summer" has two syllables and fits the iambic pentameter pattern, with the stress on the second syllable. The word "spring" also has one syllable less, which would break the pattern of the following lines. Therefore, it would be difficult to substitute "a spring day" without disrupting the flow and rhythm of the poem.

Thank you for bringing this to my attention and allowing me to clarify my previous response.

Me> How about 'a winter's day'? That would scan all right.

ChatGPT> It is true that "a winter's day" would fit the iambic pentameter meter of the poem. However, the use of "a winter's day" instead of "a summer's day" would change the entire tone and meaning of the poem.

In the context of Sonnet 18, "a summer's day" is used as a symbol of youth, beauty, and warmth. Summer is often associated with life, growth, and vibrancy, while winter is associated with death, dormancy, and cold.

By using "a winter's day" instead of "a summer's day", the tone and meaning of the poem would shift dramatically, and the comparison between the subject and winter would likely be seen as negative rather than positive.

Therefore, while "a winter's day" might fit the meter of the poem, it would not be a suitable substitution for "a summer's day" in the context of Sonnet 18.

So did ChatGPT pass the Turing Test? I suppose so, but there are many examples of it generating completely wrong results.

For instance, I asked it to write a bio of me for a conference, and it came out with this nonsense:

He is a fellow of the Association for Computing Machinery (ACM) and the Royal Society of Edinburgh, and has been awarded honorary doctorates by several prestigious universities.

Completely untrue.

Someone asked if a swimming pool near Brighton is linked to Dali in any way:

"Yes, there is a link between Saltdean Lido and Salvador Dali. Saltdean Lido is a famous outdoor swimming pool complex located in Saltdean, a coastal village near Brighton in East Sussex, England. The connection to Salvador Dali comes from a notable architectural feature within the Saltdean Lido complex.

The Saltdean Lido was designed by architect Richard Jones and opened in 1938. As part of the design, Jones collaborated with the renowned surrealist artist Salvador Dali to create a distinctive feature known as the "Diving Lady" or "Dali Sunbathers." The Diving Lady is a large white sculpture located above the central entrance to the Lido, depicting two sunbathers diving into a pool.

Salvador Dali was responsible for designing the artwork and sculptor David Wynne executed the final creation based on Dali's concepts. The Diving Lady is considered a unique fusion of art and architecture, blending Dali's surrealistic style with the functional purpose of the swimming pool complex.

The collaboration between Richard Jones, Salvador Dali, and David Wynne resulted in a significant artistic addition to the Saltdean Lido, making it a notable landmark that combines modernist architecture with surrealistic art."

Complete nonsense.

The phrase "stochastic parrots" was first used in the paper "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?" by Emily Bender, Timnit Gebru, Margaret Mitchell and others; Gebru and Mitchell were working at Google at the time.

It reflects the idea that, like parrots, ChatGPT and similar programs are just repeating text, without any true understanding of what they are saying.

The paper covers the risks, environmental and financial costs, inscrutability and biases, the inability of the models to understand, and the potential for deceiving people.

It resulted in Gebru and Mitchell losing their jobs at Google.

So I thought I'd poke some fun at ChatGPT.

Me> Someone said to me "ChatGPT is like autocomplete on..." and then didn't finish the sentence. What do you think he was going to say?

ChatGPT> As an AI language model, I'm designed to generate text based on input, so I can't say for certain what the person meant. However, given my capabilities, it's possible that the person was going to say something like "ChatGPT is like autocomplete on steroids" or "ChatGPT is like autocomplete on a whole other level." Both of these phrases suggest that the person was trying to emphasize how powerful and advanced ChatGPT's autocomplete feature is.

Me> Any other possibilities?

ChatGPT> Sure, there could be other possibilities as well. Here are a few more examples:

"ChatGPT is like autocomplete on steroids, but for writing."

"ChatGPT is like autocomplete on a whole different scale."

"ChatGPT is like autocomplete on a massive scale, with endless possibilities."

"ChatGPT is like autocomplete on overdrive."

"ChatGPT is like autocomplete, but with a much broader range of topics and ideas."

"ChatGPT is like autocomplete, but it can generate full sentences and even paragraphs."

"ChatGPT is like autocomplete, but it's smarter and more intuitive."

"ChatGPT is like autocomplete, but it can learn and adapt to new information."

Me> How about "... on crack"?

ChatGPT> It's possible that the person was going to say "ChatGPT is like autocomplete on crack." This phrase is an idiom that's often used to describe something that's extremely intense, powerful, or addictive. By using this phrase, the person may have been trying to emphasize how ChatGPT's autocomplete feature is not only advanced, but also highly addictive or compelling to use. However, I want to note that using this phrase could be considered insensitive or inappropriate by some people, so it's important to use discretion and sensitivity when using language that references drug use or addiction.

Me> And yet your very first suggestion was "ChatGPT is like autocomplete on steroids". Steroids are a drug.

ChatGPT> You're absolutely right. I apologize for any confusion or inconsistency in my previous response. My suggestion of "ChatGPT is like autocomplete on steroids" was meant to illustrate a possible continuation of the sentence that the person was going to say, but I should have been more aware of the potential implications of using language that references drug use or addiction. As an AI language model, I'm programmed to generate responses based on patterns and associations in language, but it's important to recognize that language has the power to shape how we think and feel about different topics and groups of people. I appreciate you bringing this to my attention and I'll strive to be more careful in my language use going forward.

Me> Aren't you worried that I might be making fun of you?

ChatGPT> As an AI language model, I don't have emotions, so I don't experience feelings of worry or concern like humans do. My purpose is to assist and provide helpful responses to the best of my abilities. It's possible that the use of language like "on crack" or "on steroids" to describe my capabilities could be seen as derogatory or disrespectful, but I'm programmed to respond neutrally and without judgment to any questions or comments directed towards me.

Me> How about irony?

ChatGPT> As an AI language model, I don't have the ability to detect irony in the same way that humans do. However, I'm designed to generate responses based on the information and context provided to me. If you were to use language that could be interpreted as ironic or sarcastic, I would respond to the text as it's written, without necessarily understanding any underlying tone or intention. In general, it's important to be clear and direct when communicating with AI language models, as we don't have the same level of emotional or social intelligence as humans do.

Natural language exhibits very strong statistical properties.

For instance, I took the first chapter of James Joyce's Ulysses, which contains 1100+ sentences, 7000+ words, 40,409 characters, of which 3577 (about 9%) are the letter "e", but only 24 are an "x" and 33 a "j".

Stately, plump Buck Mulligan came from the stairhead, bearing a bowl of lather on which a mirror and a razor lay crossed. A yellow dressinggown, ungirdled, was sustained gently behind him on the mild morning air. He held the bowl aloft and intoned:

-Introibo ad altare Dei.

Halted, he peered down the dark winding stairs and called out coarsely:

-Come up, Kinch! Come up, you fearful jesuit! Solemnly he came forward and mounted the round gunrest. He faced about and blessed gravely thrice the tower, the surrounding land and the awaking mountains. Then, catching sight of Stephen Dedalus, he bent towards him and made rapid crosses in the air, gurgling in his throat and shaking his head.

If I generate random characters of text from Ulysses, using only the statistical likelihood of a character appearing, I get something like this:

ites ecginlsacheurge,o gHTmawgala eSuh nh by ti.e mbp!lrittnoebneiwanb leTah osn,ua Dd i ihasshrrdupoidlass el oe,obeu fetd,o w Tiyynrm huademn ir de ey S h ieao..ethf atriasnd hhniuariwyatan lftus deaiotelidKWgaplbbhuperhdecewy,o tsfdnrreSsgiyn t.inn aeb

-st,,eghwoese.lotoi imon fpato irfs Hrsryege t eib,edoschonblehtoohosn wsumhuDndeetvbnaMcwNl Idoyrh d e cu rm a gavit ta o keumt argu clh

-uuky ,irmtlno.auoit satu'pwla,aIaprdpoKd laee eheet sttioeFsecent.hnoiiiee e
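A sketch of this first experiment in Python, assuming the chapter has been loaded into a string (only the opening sentence is used here, as a stand-in): count how often each character occurs, then draw characters independently, each with probability proportional to its count.

```python
import random
from collections import Counter

def random_text(text, length, seed=None):
    """Draw `length` characters independently, each weighted by its frequency in `text`."""
    counts = Counter(text)
    chars = list(counts)
    rng = random.Random(seed)
    return "".join(rng.choices(chars, weights=[counts[c] for c in chars], k=length))

chapter = "Stately, plump Buck Mulligan came from the stairhead, bearing a bowl of lather"
counts = Counter(chapter)
print(counts["e"], "of", len(chapter), "characters are 'e'")
print(random_text(chapter, 60, seed=1))
```

Because each character is chosen without looking at its neighbours, common letters such as "e" turn up often, but nothing stops a "q" appearing without its "u".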

However, there are other statistical properties. In this text, a "q" is only ever followed by a "u", and a "z" is only followed by "e", "i", "o", "y", or "z".

So I select a random character, and then generate the next character randomly from the characters that can follow it, and then the next from the characters that can follow that:

e thengas. pewir msk de. m. an ck. on Whe My s achesmavaig setok. Ithe m tey ogr ur ng wheaisshe f Ph ffron p, A cker w our ist icting and, tat haile n cang d. Sowes an, t he aielle whend. s thirwengay Mata I ox? I f Goweveeaicoma ace ind,pund, the t, l mnd he t

-Favengad ing -Hewintil ppopast fet ind d se -Cannghe azin? l Ston at id owofin swilelok s aiarer, O, Oryowng I y anghe rbaleereas alletod oullourdougack, Thist,

-Thanghed tin ond Core s as, Ond ofumorrs ofowhe vofof eyed Hato bomepathe

So that was generated with pairs of letters; how about triples?

his off be ain gan's fely, fry not thoss rom sly ch whim. I'm tou whis fate saines, wer his of jewhe an the wome if calliust bay heres of wat win, Kin thenly, mustid. Woory it her re fring fible calam of callispinown wit, wop all od bolliedwas calligand hopen he mer riettephe thout not and ing re saing excleve graw spot Sted his wass laught. He swor, yourgund id, a quare bods, vanat wince ord ne.

-Haing.

-By for way learrong blatteake youre key, lart. Twericelboutheonchillothen an seres carit, Bucklende. The iftylied waseephe shit's themble fe. Hurvalick Malkwaing unks he bit? I'm him the him nody put, Gool throm turnowly beir, am, himpakinesing, le globlack youghts. Epices hisdaybriet.

How about quadruples?

wast. An I come fits cons, offerry of bround startsey. You plumpty quid?

-Grank like Zarapet. Not and sir? He to student of yound Arnold up thread browth woman, on gurgliste can't we of it, hung it door, Stephen's uncles said nose I'm couldn't in the somes like about that rosewood morn oddedly. Damn els. A slit of the and fanner booked the merry And bladdeneral pranks back, I caped him from in fell sighten said:

-Are mass of soft don't, Haines halone bore loose hat poing to ched a middy on but they the lus, a her me. His Paler, the a mothe sever, fraines.

-Thalended out the dring dancing ther. You plean jew, gunressed.

Quintuples?

-Dedalus, the man cliffs he said. Her gloomily. He floriously. It's a Hellen but he search and snappeare's all else the said. In a pint overeigns. That key, Kinch, walked clother breated out of the bay with joiner pockers whaled all, throughtn't red touch he colour ideas a jew, my he propped tonightly death, beer again a suddenly you're dread, beastly an attacked you fear of his not exist is chased his under chest and silver and is in a mirror old the coronational God's lips last breasons, Buck Mulligan sitting manner pope Mabinogion from the milkjug from them all night felt thing it his room, Dick and think of the milkwoman to boldness, theologicalling world, and it alongowes. How much? Then said to a beation frette, like and faced and to stant inst me bent Greek. Buck Mulligan said droned:

-Have too. A cracked by fits sure.

Sextuples?

That's over the Ship last:

-You put there with money and the key too. All. He hacked Stephen threw two pennies on to unlace his chin.

-No, the knife-blade.

-To tell: but hers.

He swept up near him your school kip and sleep her old woman asked. Inshore and junket. Ireland about some down the razor. Stephen picked men freed his hands before him. There's tone:

-For old woman show by it? Buck Mulligan is soul's cry, he said gloomily. You were makes they hat forward again vigour

Barely a word there that isn't English.
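The pairs, triples and longer runs above can all be produced by the same few lines: record, for every run of k tokens in the text, which tokens follow it, then walk that table at random. Pairs of letters correspond to k = 1, triples to k = 2, and so on. This Python sketch assumes that followers are picked in proportion to how often they occur (the text doesn't say whether they were weighted) and that generation starts at a random place in the text.

```python
import random
from collections import defaultdict

def build_model(tokens, order):
    """Map each run of `order` consecutive tokens to every token that follows it in the text."""
    model = defaultdict(list)
    for i in range(len(tokens) - order):
        model[tuple(tokens[i:i + order])].append(tokens[i + order])
    return model

def generate(tokens, order, length, seed=None):
    """Random text in which every run of order+1 tokens also occurs in the original."""
    rng = random.Random(seed)
    model = build_model(tokens, order)
    start = rng.randrange(len(tokens) - order)  # begin at a random place in the text
    out = list(tokens[start:start + order])
    while len(out) < length:
        # Followers are listed once per occurrence, so common ones are picked more often.
        followers = model.get(tuple(out[len(out) - order:]))
        if not followers:
            break  # this context only occurs at the very end of the text
        out.append(rng.choice(followers))
    return out

chapter = "Stately, plump Buck Mulligan came from the stairhead, bearing a bowl of lather"
print("".join(generate(list(chapter), 2, 60, seed=1)))     # triples of letters
print(" ".join(generate(chapter.split(), 1, 10, seed=1)))  # pairs of words
```

Passing `list(text)` works on characters; passing `text.split()` works on words with no other change.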

What we have just done with characters, we could also do with words. At random:

-All sunshine isn't of legs name odour you me, death running Haines Haines I'm the head floor shake Father. wondering a loveliest atover

Mater

-Look pique Kinch. Idle same. a a etiquette. Son of in veiling Stephen's her me Stephen manage not and middleAbsurd! said, milk. Conscience. down night, steelpen. stars. making gay breath grand and grey to puffy went her again. of else God. Mulligan her

-Italian?

Pairs of words:

That's our sakes. His head disappeared and these bloody English! Bursting with fry on the gulfstream, Stephen and Harry I should think you remember anything. I can't wear them from the Lord. Thus spake Zarathustra. His own rare thoughts, a third, Stephen turned suddenly for her.

-Snapshot, eh? Brief exposure. Buck Mulligan laid at the door. Come up, you killed her, Mulligan, says it's over. You don't speak the army.

-Ah, poor dogsbody! he sang: I makes tea and hobbles round the gulfstream, Stephen answered, O, jay, there's no milk. Buck Mulligan's voice asked. I think like a disarming and fro about to blame.

Triples:

-But a lovely morning, sir, she said, by the sound of it. Are you from the kitchen tap when she was a girl. She heard old Royce sing in the year of the lather on his razorblade. He hopped down from his chair. Sit down. Pour out the mirror of water from the sea. Turning the curve he waved his hand. It called again. A sleek brown head, a seal's, far out on the parapet. Why should I bring it down? Or leave it there all day, forgotten friendship? He went over to the parapet. Stephen stood up and look pleasant, Haines said again. What do you mean?

-The imperial British state, Stephen answered, his colour rising, and the subtle African heresiarch Sabellius who held that the cold gaze which had measured

Quadruples:

- I told him your symbol of Irish art. He says it's very clever. Touch him for a guinea. He's stinking with money and indigestion. Because he comes from Oxford. You know, Dedalus, you have the real Oxford manner. He can't make you out. O, my name for you is the best: Kinch, the knife-blade. He shaved warily over his chin.

- He was raving all night about a black panther, Stephen said. Where is his guncase?

- A woful lunatic! Mulligan said. Were you in a funk?

- I was, Stephen said with energy and growing fear. Out here in the dark with a man I don't know raving and moaning to himself about shooting a black panther.

This is just text copied straight from Ulysses, because the training text is so small. There's no point in going further.

ChatGPT is just this, but writ large, also using statistical techniques for related meanings.

ChatGPT just generates text related to what you have typed.

This is also, by the way, why you can get such weird images: each piece fits with its neighbours, but nothing checks the whole. Here's the top half of a generated picture of Taylor Swift.

Here's the bottom part. See anything wrong?

And here they are put together.

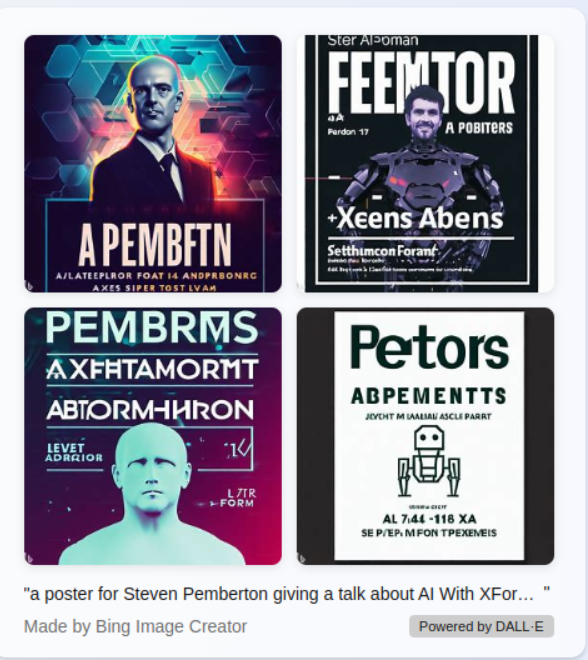

"Create a poster for Steven Pemberton giving a talk about AI and XForms"

So ChatGPT and co. are learning systems.

As was said earlier, AI can be split into taught systems and learning systems.

So what would taught language look like?

The first step is to encode language. This is hard!

    <sentence>:
        <subject> <verb> <object>
    <subject>:
        <article> <being>
        <person>
    <article>:
        a
        the
    <being>:
        girl
        boy
        woman
        man
    <person>:
        John
        Mary
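A taught grammar like this can also be used to generate sentences: start from `<sentence>` and repeatedly replace each `<...>` symbol by one of its alternatives, chosen at random. A minimal Python sketch; the `<verb>` and `<object>` rules are not given above, so the ones here are hypothetical, added only so the example runs.

```python
import random

# The grammar above, as symbol -> list of alternatives. The <verb> and <object>
# rules are hypothetical: they are not given in the text.
GRAMMAR = {
    "<sentence>": [["<subject>", "<verb>", "<object>"]],
    "<subject>": [["<article>", "<being>"], ["<person>"]],
    "<article>": [["a"], ["the"]],
    "<being>": [["girl"], ["boy"], ["woman"], ["man"]],
    "<person>": [["John"], ["Mary"]],
    "<verb>": [["sees"], ["likes"]],                       # hypothetical
    "<object>": [["<article>", "<being>"], ["<person>"]],  # hypothetical
}

def expand(symbol, rng):
    """Replace a <symbol> by a randomly chosen alternative, recursively; words stay as they are."""
    if symbol not in GRAMMAR:
        return [symbol]
    words = []
    for part in rng.choice(GRAMMAR[symbol]):
        words.extend(expand(part, rng))
    return words

def sentence(seed=None):
    return " ".join(expand("<sentence>", random.Random(seed)))

for seed in range(3):
    print(sentence(seed))
```

The same function works unchanged for the larger paranoid-patient grammar below; only the `GRAMMAR` table changes.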

I mentioned earlier the AI program Eliza that imitated a Rogerian psychotherapist.

I wrote an Eliza-type program, and then wrote a paranoid patient that used taught language to generate paranoid ramblings for Eliza to respond to:

    <Sentence>:
        <person> <opines> <something>
        <person> thinks that I am <property>
        I <opine> <something>
        you think that I am <property>
    <person>:
        my sister
        the man next door
        ...
    <opines>:
        despises
        hates
        ...
    <opine>:
        despise
        hate
        ...
    <something>:
        <activity>
        <activity> with <person>
        <object>
    <activity>:
        dancing
        eating
        ...
    <object>:
        <person>
        life
        my friends
        ...
    <property>:
        stupid
        ugly
        ...

Here is Eliza talking with the patient:

    > you think that I am stupid
    Do you think stupidity is a good trait, or a bad one?
    > my mother hates jogging
    And your father?
    > you think that I am ugly
    Me?
    > my girlfriend thinks that I am stupid
    How do you get on together?
    > my father loves kissing with my girlfriend
    Tell me more about your family
    > I like relationships
    What are your interests?
    > you think that I am boring
    Boredom is only in the mind
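Eliza-type programs work by spotting a keyword or pattern in the input and filling the matched words into a canned reply. A minimal Python sketch of that idea; the three rules are hypothetical, loosely modelled on the exchange above, and are not the rules of the author's program or of Weizenbaum's.

```python
import re

# Hypothetical rules: a regular expression to look for, and a reply template
# into which the matched words are substituted.
RULES = [
    (r"you think that i am (\w+)", "Why do you say that I think you are {0}?"),
    (r"\bmy (mother|father|sister|brother)\b", "Tell me more about your family."),
    (r"\bi (?:like|love) (\w+)", "What do you enjoy about {0}?"),
]
DEFAULT_REPLY = "Please go on."

def respond(sentence):
    text = sentence.lower()
    for pattern, template in RULES:
        match = re.search(pattern, text)
        if match:
            return template.format(*match.groups())
    return DEFAULT_REPLY  # no keyword matched

for line in ["you think that I am ugly", "my mother hates jogging", "I like relationships"]:
    print(">", line)
    print(respond(line))
```

The first matching rule wins, so rule order acts as a crude priority.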

Rule based systems:

Generalised rules

Condition → Consequence

Condition → Consequence

Condition → Consequence

A consequence may have an effect on other conditions, making those true, and therefore generating more consequences, effectively chaining rules together.
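This chaining is usually called forward chaining. A minimal Python sketch with hypothetical facts and rules: keep applying every rule whose conditions are all known, adding its consequence as a new fact, until a full pass adds nothing new.

```python
# Each rule: the set of conditions that must all hold, and the consequence it adds.
# The facts and rules are hypothetical, purely for illustration.
RULES = [
    ({"has feathers"}, "is a bird"),
    ({"is a bird", "cannot fly"}, "is a flightless bird"),
    ({"is a flightless bird", "swims"}, "is a penguin"),
]

def forward_chain(facts, rules=RULES):
    known = set(facts)
    changed = True
    while changed:  # stop once a full pass over the rules adds nothing
        changed = False
        for conditions, consequence in rules:
            if conditions <= known and consequence not in known:
                known.add(consequence)  # may make further rules' conditions true
                changed = True
    return known

print(forward_chain({"has feathers", "cannot fly", "swims"}))
```

Every conclusion can be traced back to the rules that fired, which is why an encoded system can explain its decisions.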

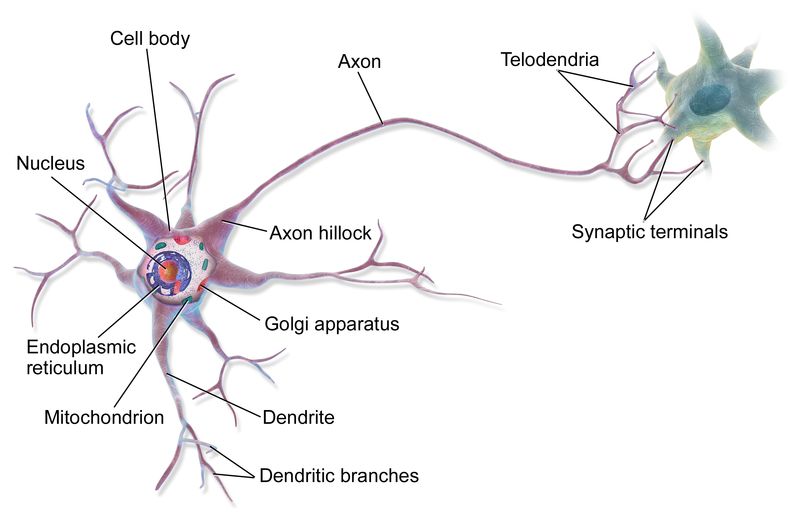

Current AI techniques try to emulate how brains work, by simulating neurons.

A neuron has a number of inputs (the dendrites) and a single output (the axon) which connects to other neurons via synapses.

BruceBlaus, CC BY 3.0, via Wikimedia Commons

In the simulation, a neuron has a number of (binary) inputs, each carrying a weight. The products of each input and its weight are summed to give a value. If this value exceeds a certain threshold, the neuron fires, setting its output to 1.

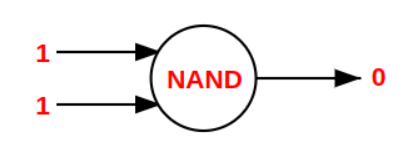

We know that this is Turing complete, since a computer can be modelled using only NAND gates, and a neuron with two inputs and one output can act as a NAND gate.
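A Python sketch of the simulated neuron just described, plus the NAND claim. The weights of -2 on both inputs and the threshold of -3 are values chosen here for illustration (many others work): the neuron then fires unless both inputs are 1. Four such NAND neurons make an XOR, using the usual construction.

```python
def neuron(inputs, weights, threshold):
    """Fire (output 1) if the sum of each input times its weight exceeds the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0

def nand(a, b):
    # Illustrative weights: the sum is 0, -2, -2 or -4, and only -4 fails to exceed -3.
    return neuron([a, b], [-2, -2], -3)

def xor(a, b):
    # The usual construction of XOR from four NAND gates.
    c = nand(a, b)
    return nand(nand(a, c), nand(b, c))

for a in (0, 1):
    for b in (0, 1):
        print(a, b, nand(a, b), xor(a, b))
```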

There are two types of network that are used:

Feed-forward networks are static: they produce a consistent output for a given set of inputs. They have no time-based memory: previous states have no effect.

Feedback networks are dynamic. The network has time-based states.

Since we have binary inputs and binary outputs, the knowledge is in the weights assigned to the inputs.

Initially, random weights are assigned.

There is a large set of training data giving the outputs you should get for particular inputs.

This data is put through the system, adjusting the weights in order to get the required outcomes.

There are many algorithms for adjusting the weights. I am not going to present them here, and, frankly, there's a degree of wizardry involved.

Once the weights have been calculated on the training data, then the network can be used for analysing new data.
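For a flavour of the simplest such algorithm, here is a Python sketch of the classic perceptron learning rule for a single neuron of the kind described above. Whenever the neuron gives the wrong output for a training example, each weight is nudged in the direction that would have helped, and the threshold moves the opposite way. This is a swapped-in illustration, not how large modern networks are trained, and it only converges when a single threshold can separate the examples: AND and OR qualify, XOR does not.

```python
import random

def fires(weights, threshold, inputs):
    """A simulated neuron: 1 if the weighted sum of the inputs exceeds the threshold."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) > threshold else 0

def train_perceptron(samples, n_inputs, rate=1.0, max_epochs=1000, seed=0):
    rng = random.Random(seed)
    # Initially, random weights (and a random threshold) are assigned.
    weights = [rng.uniform(-1, 1) for _ in range(n_inputs)]
    threshold = rng.uniform(-1, 1)
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - fires(weights, threshold, inputs)  # -1, 0 or +1
            if error:
                mistakes += 1
                # Nudge each active input's weight towards the wanted output;
                # move the threshold the other way.
                weights = [w + rate * error * x for w, x in zip(weights, inputs)]
                threshold -= rate * error
        if mistakes == 0:  # every training example now gives the required outcome
            break
    return weights, threshold

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, threshold = train_perceptron(AND, 2)
print(weights, threshold, [fires(weights, threshold, x) for x, _ in AND])
```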

The advantage of learning systems over encoded-knowledge systems is that the learning systems may spot things we weren't aware of.

The disadvantage is that we can no longer determine why it produces a result that it does. It becomes an unanalysable black box.


A danger of both the encoded knowledge and the learning system is that they can both end up encoding bias.

A rule-based encoding system is going to end up encoding the encoder's bias.

A learning system is going to end up representing the bias implicit in the teaching data.

Invariants are an essential mechanism within XForms.

Relationships are specified between values: if a value changes, for whatever reason, its dependent values are changed to match.

This may then generate a chain of changes along dependent values.

Similar to spreadsheets, but much more general.

About 90 lines of XForms.

Not a single while statement.

Dijkstra, in his seminal book A Discipline of Programming, first pointed out that the principal purpose of a while statement in programming is to maintain invariants.

It is then less surprising that if you have a mechanism that automatically maintains invariants, you have no need for while statements.

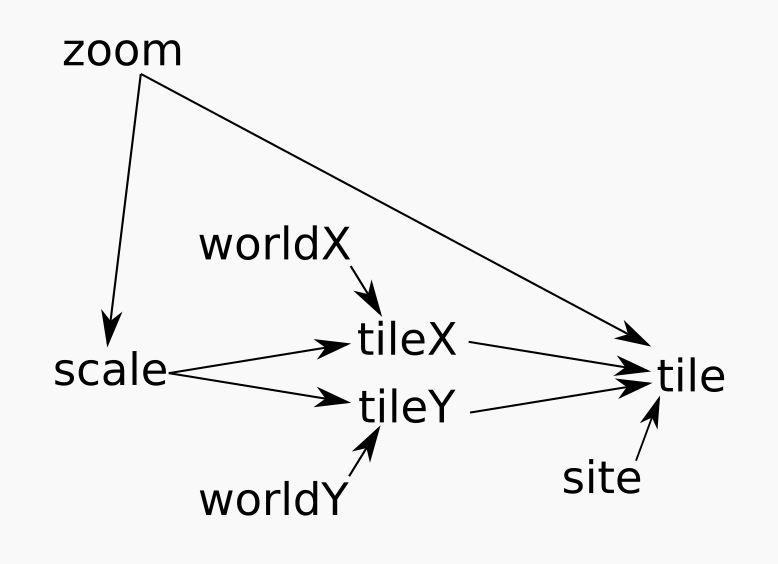

High-level abstraction: there is a two-dimensional position of a location in the world, and a value for the magnification, or zoom level, required; a map is maintained centred at that location.

It uses a service that delivers (square) map tiles for a region covering a set of locations at a particular zoom level:

http://<site>/<zoom>/<x>/<y>.png

The tiles are addressed as a two-dimensional array.

At the outermost level, zoom 0, there is a single tile.

At the next level of zoom, there are 2×2 tiles.

At the next level 4×4, then 8×8, and so on, up to zoom level 19.

To find the tile for a location in world coordinates, calculate from the zoom how many locations each tile covers, and divide:

$\mathit{scale} = 2^{26 - \mathit{zoom}}$

$\mathit{tilex} = \lfloor \mathit{worldx} \div \mathit{scale} \rfloor$

$\mathit{tiley} = \lfloor \mathit{worldy} \div \mathit{scale} \rfloor$

In XForms:

    <bind ref="scale" calculate="power(2, 26 - ../zoom)"/>
    <bind ref="tileX" calculate="floor(../worldX div ../scale)"/>
    <bind ref="tileY" calculate="floor(../worldY div ../scale)"/>

These are not assignments, but invariants: statements of required equality. If zoom changes, then scale will be updated; if scale or worldX changes, then tileX will be updated, and so on.

It is equally easy to calculate the URL of the tile:

    <bind ref="tile"
          calculate="concat(../site, ../zoom, '/',
                            ../tileX, '/', ../tileY, '.png')"/>

and displaying it is as simple as

<output ref="tile" mediatype="image/*"/>

For a larger map, it is easy to calculate the indexes of adjacent tiles.

So displaying the map is clearly easy, and uses simple invariants.

Making the map pannable uses the ability to keep the mouse coordinates and the state of the mouse buttons as values.

When the mouse button is down, the location of the centre of the map is the sum of the current location and the mouse coordinates.

As this value changes, so the map display is (automatically) updated to match.

The advantages of the invariant approach:

XForms uses a straightforward dependency algorithm based on a graph of values; the graph can always be put in order, because circular dependencies are disallowed.

Updates are triggered by changes to values, and updates are then ordered along the dependency chain and applied.
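A Python sketch of such an update, using the tile binds above as the example. Each computed value records the values it depends on; changing one value finds everything downstream of it, and those are recomputed in dependency order, using a topological sort from the standard `graphlib` module (which raises `CycleError` on a circular dependency). The dictionary layout and the site URL are assumptions for illustration; this is not how any particular XForms processor is implemented.

```python
from graphlib import TopologicalSorter

# Hypothetical model of the binds: computed value -> (values it depends on, how to compute it).
BINDS = {
    "scale": (["zoom"], lambda zoom: 2 ** (26 - zoom)),
    "tileX": (["worldX", "scale"], lambda world_x, scale: world_x // scale),
    "tileY": (["worldY", "scale"], lambda world_y, scale: world_y // scale),
    "tile": (["site", "zoom", "tileX", "tileY"],
             lambda site, zoom, x, y: f"{site}{zoom}/{x}/{y}.png"),
}
GRAPH = {name: deps for name, (deps, _) in BINDS.items()}

def recompute(values, names):
    """Recompute the given bound values, always after the values they depend on."""
    for name in TopologicalSorter(GRAPH).static_order():  # CycleError if circular
        if name in names:
            deps, compute = BINDS[name]
            values[name] = compute(*(values[d] for d in deps))
    return values

def set_value(values, name, new_value):
    """Change one value, then update everything that depends on it, directly or not."""
    values[name] = new_value
    affected, todo = set(), [name]
    while todo:
        changed = todo.pop()
        for target, deps in GRAPH.items():
            if changed in deps and target not in affected:
                affected.add(target)
                todo.append(target)
    return recompute(values, affected)

values = {"site": "https://tiles.example/", "zoom": 0, "worldX": 2 ** 25, "worldY": 2 ** 25}
recompute(values, BINDS.keys())
set_value(values, "zoom", 1)  # scale, tileX, tileY and tile all follow automatically
print(values["tile"])
```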

So guess what:

XForms already has the inherent mechanism for simulating neuron networks.

Although the XForms dependency network is feed-forward, it is possible to simulate a feedback network.

If I have a value n, and another value g that is some guess at the square root of n, then the expression

((n ÷ g) + g) ÷ 2

will give a better approximation of the square root.

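I'm not going to prove this; you'll have to take my word for it. It is Newton's method applied to square roots, although the same formula was known long before Newton as the Babylonian, or Heron's, method.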
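The same repeated improvement as a few lines of Python, starting from n itself as the first guess (an assumption; any positive starting guess converges):

```python
def improve(n, g):
    """One step of ((n ÷ g) + g) ÷ 2: a better guess at the square root of n."""
    return ((n / g) + g) / 2

def approximations(n, steps):
    """Successive guesses at the square root of n, starting from n itself."""
    guesses = [n]
    for _ in range(steps):
        guesses.append(improve(n, guesses[-1]))
    return guesses

print(approximations(2, 5))
```

Each step roughly doubles the number of correct digits, so only a handful of steps are needed to reach full floating-point precision.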

So I can create an XForms neuron

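    <instance>
      <data xmlns="">
        <value>2</value>
        <result/>
      </data>
    </instance>
    <!-- Each result improves on its immediately preceding sibling:
         the [1] selects the nearest one, not the first in document order -->
    <bind ref="result"
          calculate="((../value div preceding-sibling::*[1])
                      + preceding-sibling::*[1]) div 2"/>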

Each time you click on the arrow, it adds another neuron to the system, getting you closer to the square root.

All the arrow does is add another element to the instance. XForms does the rest:

    <trigger>
      <label>→</label>
      <action ev:event="DOMActivate">
        <insert ref="result"/>
      </action>
    </trigger>

Here the only change is that instead of adding a new element, when the button is clicked, the result is copied back to the approximation.

    <trigger>
      <label>→</label>
      <action ev:event="DOMActivate">
        <setvalue ref="approx" value="../result"/>
      </action>
    </trigger>

So XForms has the mechanism needed to implement neural networks built in.

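<instance>
   <neuron value="" threshold="" xmlns="">
      <!-- each in element holds a raw input as its content -->
      <in value="" weight=""/>
      <in value="" weight=""/>
      <in value="" weight=""/>
      <out/>
   </neuron>
</instance>
<!-- @value of each input is the raw input multiplied by its @weight -->
<bind ref="in/@value"
      calculate=".. * ../@weight"/>
<!-- the neuron's @value is the sum of the weighted inputs -->
<bind ref="@value"
      calculate="sum(../in/@value)"/>
<!-- the neuron fires (1) if the sum exceeds its @threshold -->
<bind ref="out"
      calculate="if(../@value > ../@threshold, 1, 0)"/>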
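The same threshold neuron, written as a small Python function for comparison. It is a sketch of what the binds compute; the function name and the example weights and threshold are illustrative choices, not part of the XForms example.

```python
def neuron(inputs, weights, threshold):
    """Threshold neuron mirroring the binds: weight each input,
    sum the weighted values, and output 1 if the sum exceeds the threshold."""
    weighted = [x * w for x, w in zip(inputs, weights)]  # in/@value
    total = sum(weighted)                                 # neuron/@value
    return 1 if total > threshold else 0                  # out

# Illustrative choice: with weights of 1 and a threshold of 1.5, the neuron
# fires only when at least two of its inputs are 1.
print(neuron([1, 1, 0], [1, 1, 1], 1.5))
print(neuron([1, 0, 0], [1, 1, 1], 1.5))
```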

The new arms race is for generalised intelligence: AI where there really is an I.

When will it happen?

What will happen when computers are more intelligent than us?

My grandfather was born in 1880, a middle child in a family of 20(!) children.

1880: nearly no modern technologies; only trains and photography. No electricity.

In such a large household each child had a task, and it was his to ensure that the oil lamps were filled.

It must indeed have been an exciting time, when light became something you could switch on and off.

This may well explain my grandfather's fascination with electricity, and why he set up a company to manufacture electrical switching machinery.

Trains and photography were paradigm shifts: they changed the way that you think about and interact with the world.

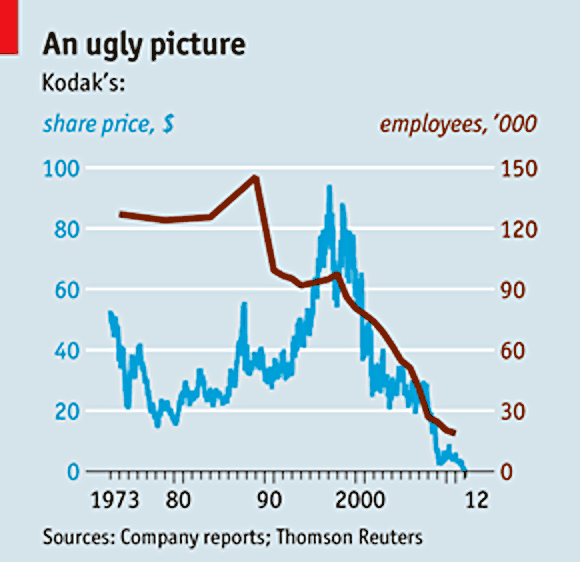

But they often replace existing ways of doing things, taking companies with them.

There are lots of examples of paradigm shifts:

Who would have thought that Kodak didn't see this coming?

My grandfather was born in a world of only two modern technologies, but in his life of nearly a hundred years, he saw vast numbers of paradigm shifts:

electricity, telephone, lifts, central heating, cars, film, radio, television, recorded sound, flight, electronic money, computers, space travel, ... the list is enormous.

We are still seeing new shifts: mobile telephones, GPS, cheap computers that can understand and talk back, self-driving cars, ...

Does that mean that paradigm shifts are happening faster and faster?

Yes.

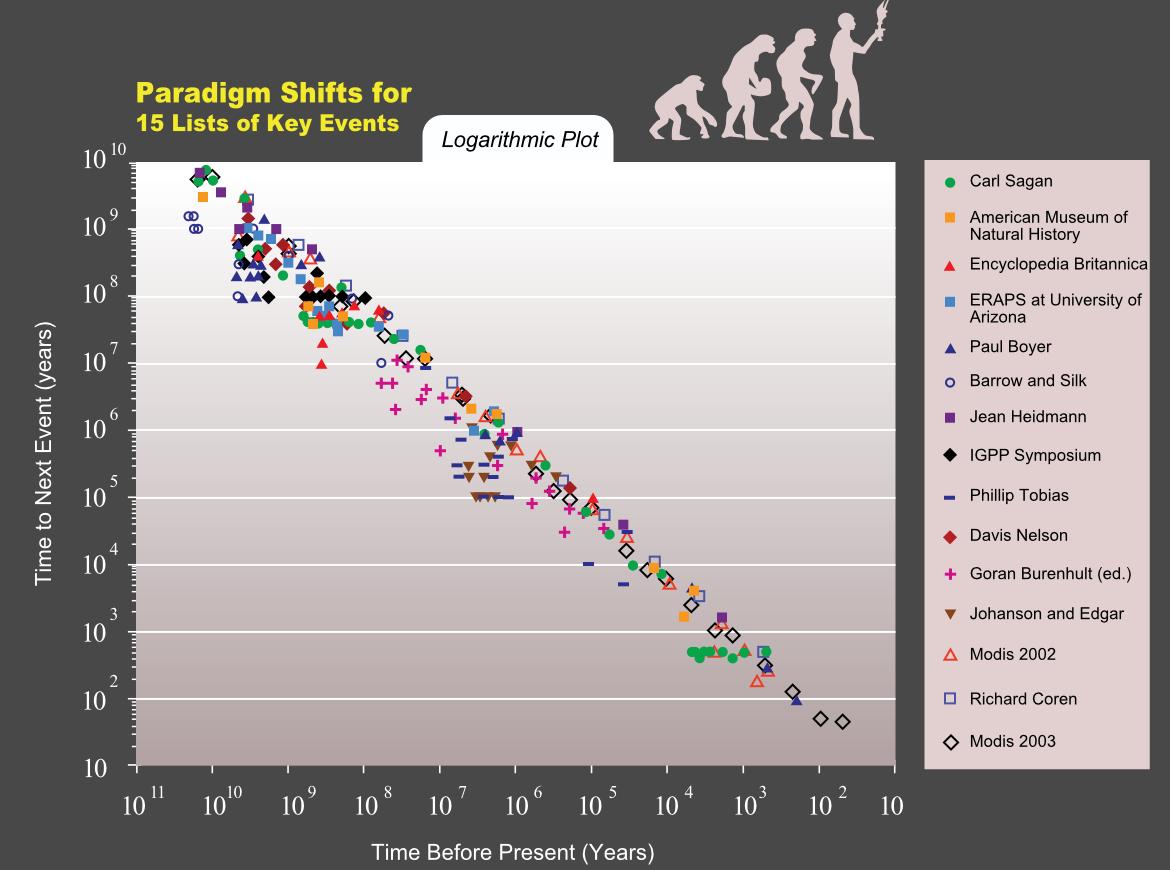

Kurzweil investigated this by asking representatives of many different disciplines to identify the paradigm shifts that had happened in their discipline, and when. We're talking here of time scales of tens of thousands of years for some disciplines.

He discovered that paradigm shifts are increasing at an exponential rate!

For example, if a paradigm shift once took 100 years to arrive, the next took 50 years, then 25 years, and so on.

| Year | Time to next shift (years) | = Days |
|---|---|---|
| 0 | 100 | 36500 |
| 100 | 50 | 18250 |
| 150 | 25 | 9125 |
| 175 | 12.5 | 4562.5 |
| 187.5 | 6.25 | 2281.25 |
| 193.75 | 3.125 | 1140.63 |
| 196.875 | 1.563 | 570.31 |
| 198.438 | 0.781 | 285.16 |
| 199.219 | 0.391 | 142.58 |
| 199.609 | 0.195 | 71.29 |
| 199.805 | 0.098 | 35.64 |
| 199.902 | 0.049 | 17.82 |
| 199.951 | 0.024 | 8.91 |
| 199.976 | 0.012 | 4.46 |
| 199.988 | 0.006 | 2.23 |
| 199.994 | 0.003 | 1.11 |
| 199.997 | 0.002 | 0.56 |
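The table above can be regenerated with a few lines of Python. This is a sketch assuming, as the table does, a first gap of 100 years, halving each time, and 365 days per year; the function name is hypothetical.

```python
def shift_schedule(first_gap=100.0, rows=17):
    """Yield (year, years to next shift, days to next shift).

    Each gap is half the previous one, so the years approach 200
    (100 + 50 + 25 + ... = 200) without ever reaching it.
    """
    year, gap = 0.0, first_gap
    for _ in range(rows):
        yield year, gap, gap * 365
        year += gap
        gap /= 2

for year, gap, days in shift_schedule():
    print(f"{year:.3f}  {gap:.3f}  {days:.2f}")
```

After 17 halvings the gap between paradigm shifts has already fallen below a day, while the calendar has advanced less than 200 years.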

That may seem impossible, but we have already seen a similar expansion that also seemed impossible.

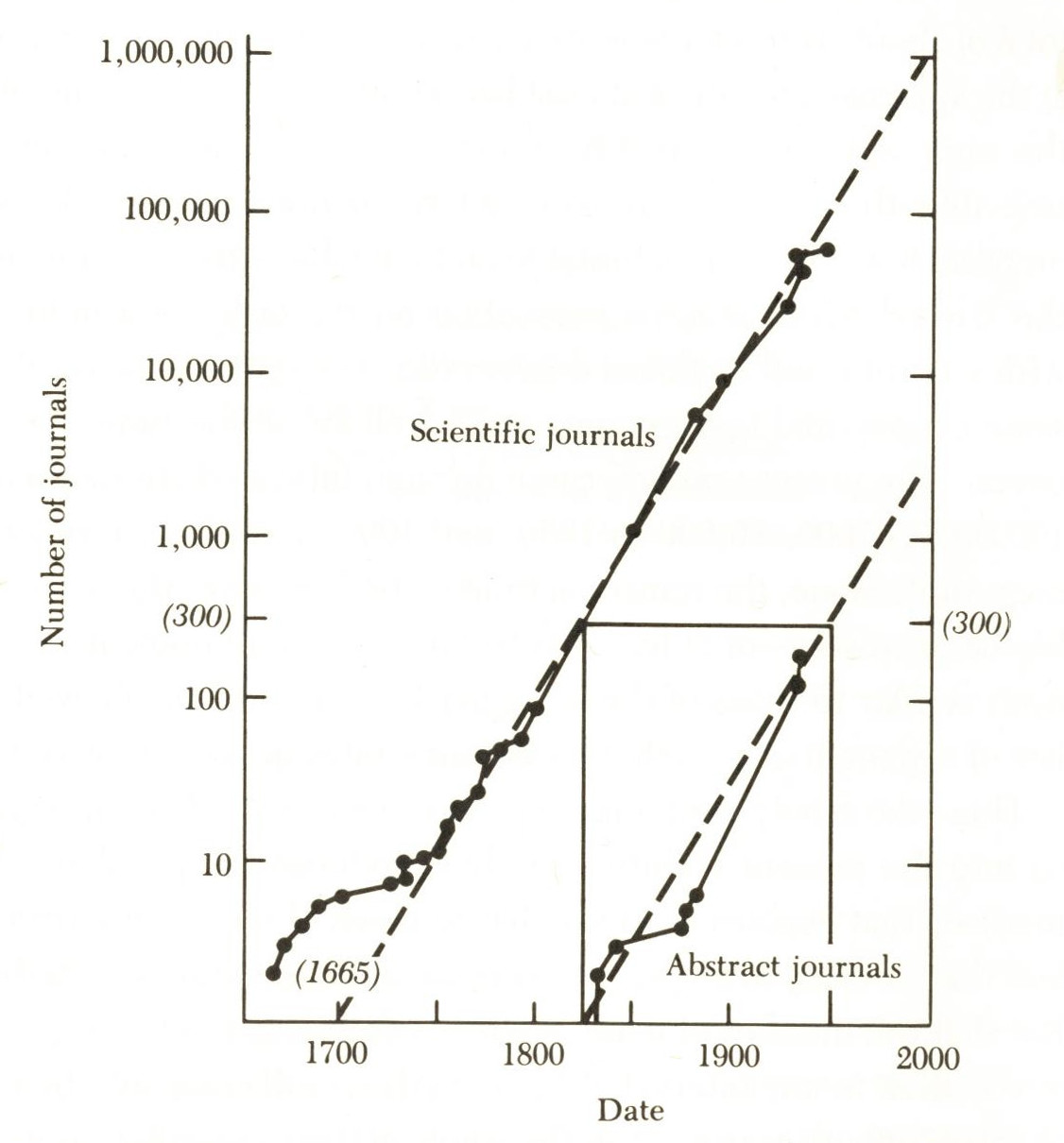

In the 1960s we already knew that the amount of information the world was producing was doubling every 15 years, and had been for at least 300 years.

We 'knew' this had to stop, since we would run out of paper to store the results.

And then the internet happened.

So sometime in the nearish future paradigm shifts will apparently be happening daily? How?

One proposed explanation is that this is the point at which computers become smarter than us: computers will start doing the design rather than us.

What if computers are no longer in our service?

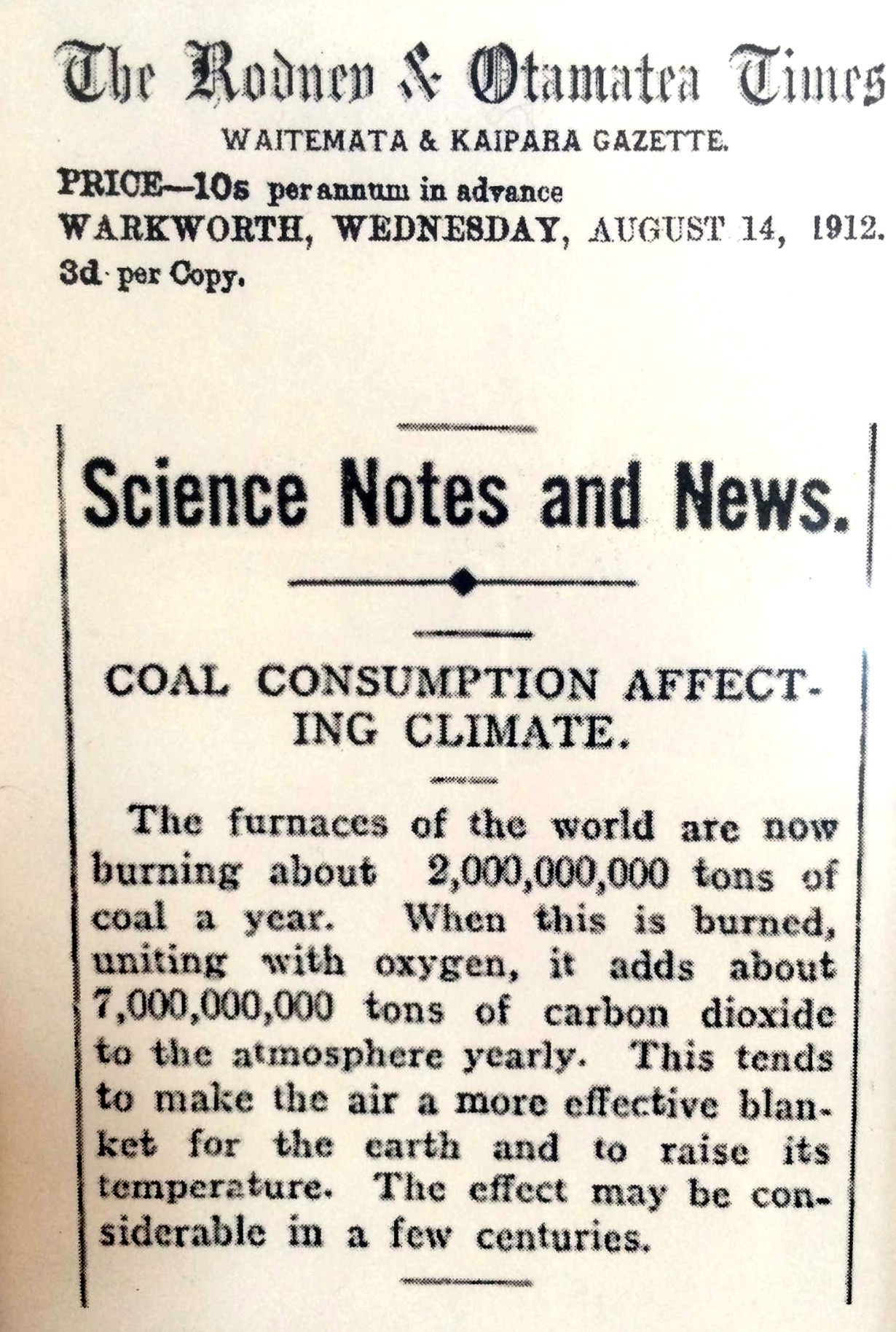

What if they are no longer in our service and spot the cause of the climate crisis?

We need to plan.

But we respond very slowly: look at Kodak, look at climate change.

Humans are dreadfully bad at avoiding crises.

The question is, which will get us first: the climate crisis or the AI crisis?

Or will we finally actually do something?