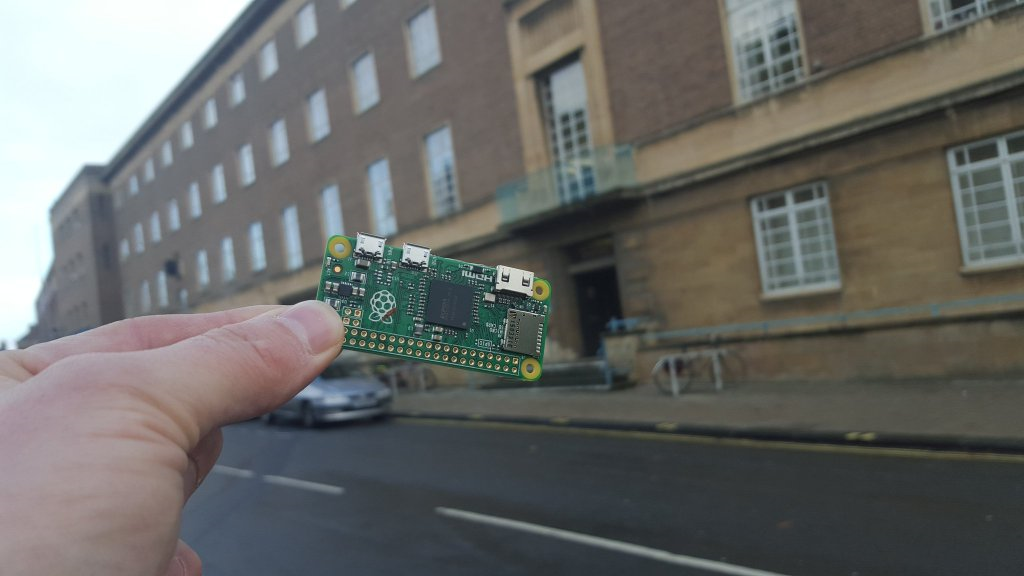

The first computer so cheap that they gave it away on the cover of a magazine

The Elliott ran for about a decade, 24 hours a day.

How long do you think it would take the Raspberry Pi Zero to duplicate that amount of computing?

The Raspberry Pi is about one million times faster...
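Taking the question at face value with round numbers gives a rough answer. This sketch assumes "about a decade" means exactly 10 years of continuous running and "about one million times faster" means exactly 10^6. Both are simplifying assumptions, not measurements.

```python
# Rough estimate: how long would a machine 10^6 times faster need to
# redo ten years of round-the-clock Elliott computing?
# Assumptions (round figures, not measurements): 10 years, exactly 10^6 speedup.
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # Julian year = 31,557,600 s

elliott_years = 10
speedup = 1_000_000

pi_seconds = elliott_years * SECONDS_PER_YEAR / speedup
print(f"{pi_seconds:.0f} s, about {pi_seconds / 60:.1f} minutes")
# prints: 316 s, about 5.3 minutes
```

So a decade of continuous work shrinks to roughly five minutes.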

The Raspberry Pi is not only one million times faster. It is also one millionth the price.

A factor of a million million times better.

A terabyte is a million million bytes: nowadays we talk in terms of very large numbers.

Want to guess how long a million million seconds is?

A really big number...

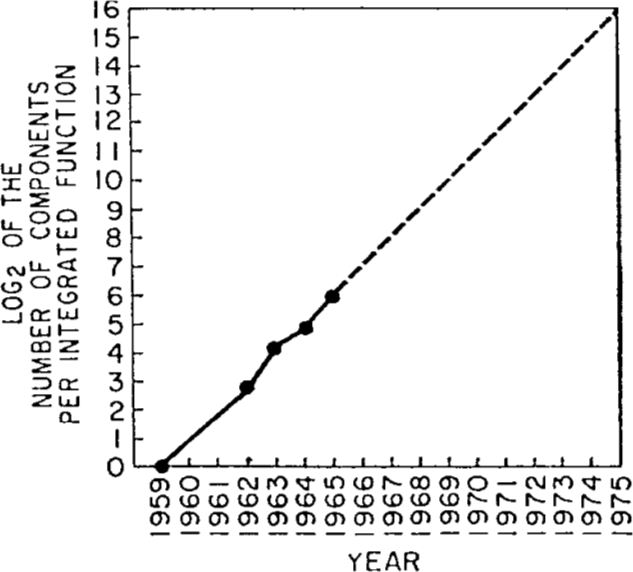
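For the record, here is the answer to the million-million-seconds question. Divide 10^12 seconds by the number of seconds in a year. The 365.25-day Julian year is an assumption here, and it affects only the last digits.

```python
# How long is a million million (10^12) seconds?
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # Julian year = 31,557,600 s

years = 10**12 / SECONDS_PER_YEAR
print(f"{years:,.0f} years")  # prints: 31,688 years
```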

In fact a million million times improvement is about what you would expect from Moore's Law over 58 years.

Except: the Raspberry Pi is two million times smaller as well, so it is much better than even that.
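Here is a quick check of the 58-year figure. A factor of 10^12 is about 40 doublings, since 2^40 ≈ 1.1 × 10^12. At one doubling every 18 months, that comes to roughly 60 years, consistent with the "about" in the claim. The sketch assumes the 18-month doubling period throughout and ignores the extra gain from the size reduction.

```python
import math

# Assumption: one doubling every 18 months, the figure quoted for 1975 onwards.
DOUBLING_PERIOD_YEARS = 1.5

factor = 10**12                       # "a million million times"
doublings = math.log2(factor)         # number of doublings needed
years = doublings * DOUBLING_PERIOD_YEARS

print(f"{doublings:.1f} doublings, about {years:.0f} years")
# prints: 39.9 doublings, about 60 years
```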

In 1965 Gordon Moore proposed that the number of 'components' on a chip would double per year at constant price (and size).

In 1975, he adjusted it to 18 months.

In 2015 Moore's Law turned 50 years old.

Or less prosaically: Moore's Law was 33⅓ iterations of itself old.

The first time I heard that Moore's Law was nearly at an end was in 1977, from no less than Grace Hopper, at Manchester University.

Since then I have heard many times that it was close to its end, or had even already ended.

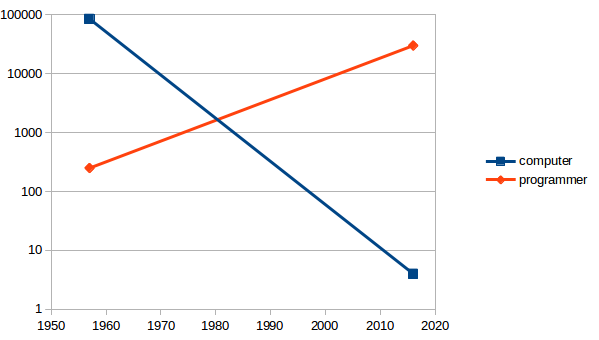

In the 1950s, computers were so expensive that nearly no one bought them; nearly everyone leased them.

To rent time on a computer then would cost you of the order of $1000 per hour: several times the annual salary of a programmer!

When you leased a computer in those days, you would get programmers for free to go with it.

Compared to the cost of a computer, a programmer was almost free.

The first programming languages were designed in the 1950s:

Cobol, Fortran, Algol, Lisp.

They were designed with that economic relationship of computer and programmer in mind.

It was much cheaper to let the programmer spend lots of time producing a program than to let the computer do some of the work for you.

Programming languages were designed so that you tell the computer exactly what to do, in its terms, not what you want to achieve in yours.

It happened slowly, almost unnoticed, but nowadays we have the exact opposite position:

Compared to the cost of a programmer, a computer is almost free.

I call this Moore's Switch.

Relative costs of computers and programmers, 1957-now

But we are still programming in programming languages that are direct descendants of the languages designed in the 1950s!

We are still telling the computers what to do.

A new way of programming: declarative programming.

This describes what you want to achieve, but not how to achieve it.

A bit like how spreadsheets work, but much more general.

Let me give some examples.

Declarative approaches describe the solution space.

A classic example is what you learn in school:

The square root of a number is a number that, multiplied by itself, gives the original number.

This doesn't tell you how to calculate the square root; but no problem, because we have machines to do that for us.
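The idea can be sketched in Python. The program below states only the school definition of a square root and leaves the searching to a general-purpose routine. That routine, `solve`, is a hypothetical helper of our own: a plain bisection search, not part of any declarative system. A real declarative language would hide the solver altogether. The point is that `square_root` says *what* is wanted, not *how* to compute it.

```python
from typing import Callable


def solve(g: Callable[[float], float], lo: float, hi: float) -> float:
    """Hypothetical general-purpose helper: find y in [lo, hi] where g(y) = 0.

    Plain bisection. Assumes g is continuous on [lo, hi] and that g(lo) and
    g(hi) have opposite signs (or one of them is already 0).
    """
    g_lo = g(lo)
    if g_lo == 0:
        return lo
    if g(hi) == 0:
        return hi
    while True:
        mid = (lo + hi) / 2
        if mid <= lo or mid >= hi:
            return mid  # lo and hi are adjacent floats: as close as we can get
        g_mid = g(mid)
        if g_mid == 0:
            return mid
        if (g_mid < 0) == (g_lo < 0):
            lo, g_lo = mid, g_mid  # sign change lies in the upper half
        else:
            hi = mid               # sign change lies in the lower half


def square_root(a: float) -> float:
    """Only the school definition: the y >= 0 with y * y == a."""
    if a < 0:
        raise ValueError("no real square root of a negative number")
    # For a >= 0 the root lies in [0, max(1, a)].
    return solve(lambda y: y * y - a, 0.0, max(1.0, a))
```

The same `solve` would find a cube root from `lambda y: y * y * y - a` without any change.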

In a procedural language, by contrast, you have to write something like this:

```
function f a: {
    x ← a
    x' ← (a + 1) ÷ 2
    eps ← 1.19209290e-07
    while abs(x − x') > eps × x: {
        x ← x'
        x' ← ((a ÷ x') + x') ÷ 2
    }
    return x'
}
```

This is why documentation is so essential in procedural programming.
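The pseudocode above is Newton's method for square roots, also called Heron's method. Each step replaces the guess x' with the average of x' and a ÷ x'. The loop stops once successive guesses agree to within single-precision epsilon, relative to the guess.

Here is a Python translation as a sketch, keeping the deliberately uninformative name `f`. The checks for negative and zero `a` are our additions. For a = 0 the original loop keeps halving the guess until it underflows to zero, and then divides by zero.

```python
def f(a: float) -> float:
    # Deliberately uninformative name, as in the pseudocode: it computes sqrt(a).
    if a < 0:
        raise ValueError("f is only defined for a >= 0")
    if a == 0:
        return 0.0  # added guard: the original loop would end by dividing by zero
    eps = 1.19209290e-07       # single-precision machine epsilon (C's FLT_EPSILON)
    x = a
    x_next = (a + 1) / 2       # first guess, never below sqrt(a)
    # Stop when two successive guesses agree to within eps, relative to the guess.
    while abs(x - x_next) > eps * x:
        x = x_next
        x_next = (a / x_next + x_next) / 2   # Newton step: average of x' and a / x'
    return x_next
```

Without the comments, nothing in `f` tells the reader that it computes a square root.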

A clock program written in a procedural language: 1000 lines, almost all of it administrative. Only 2 or 3 lines have anything to do with time.

And this was the smallest example I could find. The largest was more than 4000 lines.

A declarative description of a clock:

```
type clock = (h, m, s)
    displayed as circled(combined(hhand; mhand; shand; decor))
shand = line(slength) rotated (s × 6)
mhand = line(mlength) rotated (m × 6)
hhand = line(hlength) rotated (h × 30 + m ÷ 2)
decor = ...
slength = ...
...
clock c
c.s = system:seconds mod 60
c.m = (system:seconds div 60) mod 60
c.h = (system:seconds div 3600) mod 24
```
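The rotation expressions in the clock description are plain arithmetic. The second hand turns 6° per second and the minute hand 6° per minute. The hour hand turns 30° per hour plus ½° per minute.

Below is a sketch of the same calculation in Python. `hand_angles` is a hypothetical name, and it assumes `system:seconds` counts from a midnight. There is a difference in kind between the two versions. In the declarative description the hands stay correct automatically as `system:seconds` changes. A procedural program has to call a function like this again on every tick and redraw the hands itself.

```python
def hand_angles(seconds: int) -> tuple[float, float, float]:
    """Return (hour, minute, second) hand angles in degrees, clockwise from 12.

    Mirrors the clock description: s × 6, m × 6 and h × 30 + m ÷ 2.
    Assumes `seconds` counts from a midnight (our assumption about system:seconds).
    """
    s = seconds % 60
    m = (seconds // 60) % 60
    h = (seconds // 3600) % 24
    second_hand = (s * 6.0) % 360
    minute_hand = (m * 6.0) % 360
    hour_hand = (h * 30 + m / 2) % 360  # a 24-hour h goes round the dial twice a day
    return hour_hand, minute_hand, second_hand


# 15:30:15: hour hand halfway between 3 and 4, minute hand at 6, second hand at 3
print(hand_angles(15 * 3600 + 30 * 60 + 15))  # (105.0, 180.0, 90.0)
```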

A certain company makes one-off BIG machines (you can walk into them). The user interface is very demanding, and traditionally needed:

5 years, 30 people.

With XForms this became:

1 year, 10 people.

Do the sums. Assume one person costs 100k a year. Then the cost has gone from 15M (5 years × 30 people) to 1M (1 year × 10 people). They have saved 14 million! (And 4 years.)
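The sums can be written out as person-years times the yearly cost per person. This sketch uses the talk's 100k per person-year figure. The currency is unspecified, and `project_cost` is a hypothetical helper name.

```python
# The talk's assumption: one person costs 100k a year (currency unspecified).
COST_PER_PERSON_YEAR = 100_000


def project_cost(years: int, people: int) -> int:
    """Total staff cost: person-years times the yearly cost per person."""
    return years * people * COST_PER_PERSON_YEAR


traditional = project_cost(years=5, people=30)   # 150 person-years
with_xforms = project_cost(years=1, people=10)   # 10 person-years

print(f"{traditional:,} -> {with_xforms:,}: saved {traditional - with_xforms:,}")
# prints: 15,000,000 -> 1,000,000: saved 14,000,000
```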

Manager: I want you to come back to me in 2 days with estimates of how long it will take your teams to make the application.

[Two days later]

Programmer: I'll need 30 days to work out how long it will take.

XFormser: It's already running!

The British National Health Service started a project for a health records system.

One person then created a system using XForms.

For historical reasons, present programming languages are at the wrong level of abstraction: they don't describe the problem, but only one particular solution.

Declarative programming allows programmers to be at least ten times more productive: what you now write in a week would take a morning; what now takes a month would take a couple of days.

Once project managers realise they can save 90% on programming costs, they will switch to declarative programming.
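The ten-fold productivity figure and the 90% saving are the same claim seen from two sides: a tenth of the time costs a tenth as much. This sketch checks the week-to-morning and month-to-days examples. It assumes an 8-hour working day, a 5-day week and 20 working days a month; those are our assumptions, not figures from the talk.

```python
# Assumptions (ours): 8-hour working day, 5-day week, 20 working days a month.
HOURS_PER_DAY = 8
SPEEDUP = 10  # "at least ten times more productive"

week_hours = 5 * HOURS_PER_DAY
month_days = 20

print(week_hours / SPEEDUP, "hours instead of a week")   # 4.0 hours: a morning
print(month_days / SPEEDUP, "days instead of a month")   # 2.0 days
print(f"saving: {1 - 1 / SPEEDUP:.0%}")                  # saving: 90%
```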

I believe that eventually everyone will program declaratively: fewer errors, more time, more productivity.