Information Theory 2015

News:

9 Dec 2015: added 2014 exam24 Nov 2015: updated Exercise #4

24 Oct 2015: updated course outline

25 September 2015: updated required previous knowledge

5 March 2015: created new page

27 Oct 2014: Good reasons to put away your mobile phone during class

Content of the course

Information theory was developed by Claude E. Shannon in the 1950s to investigate the fundamental limits on signal-processing operations such as compressing data and on reliably storing and communicating data. These tasks have turned out to be fundamental for all of computer science.In this course, we quickly review the basics of (discrete) probability theory and introduce concepts such as (conditional) Shannon entropy, mutual information and Renyi entropy. Then, we prove Shannon's theorems about data compression and channel coding. In the course, we also cover some aspects of information-theoretic security and show applications of information theory to the area of machine learning.

Requirements

Students are required to know the (theory) contents of the course Basic Probability, Computing and Statistics in the Master of Logic (no programming will be required for this course). Study the script and the theory homework exercises.Intended Learning Outcomes

At the end of the course, you will be able to solve problems of the following kinds:(Probability)

- compute the probability of an event using the most common discrete probability distributions (Bernoulli, binomial, and geometric);

- compute inverse probabilities using Bayes' rule;

- compute the means and variances of commonly used probability distributions;

- compute the means and variances of sums or products of a random variables with known distributions;

- bound the probability of an extreme event using inequalities such as the Markov bound, Chebyshev's inequality, or Hoeffding's inequality.

(Entropy and related concepts)

- compute the entropy of a random variable;

- compute the mutual information between two random variables;

- use entropy diagrams to reason about the relative size of the entropies, conditional entropies, and mutual information of two or three random variables;

- use Jensen's inequality to bound the mean of a random variable defined in terms of convex or concave function of another random variable.

(Data compression)

- construct a d-ary Huffman code for a random variable.

- use Kraft's inequality to check whether a prefix-free code can be constructed to fit certain codeword lengths;

- bound the possible rate of lossless compression of output from a given source using Shannon's source coding theorem;

- define a typical set and reason about its size, probability, and elements;

- compute the Shannon-Fano-Elias codeword for a sample from a stochastic process.

- compute the entropy rate of a Markov process.

(Noisy-channel coding)

- construct a probability model of a communication channel given a verbal description;

- compute the channel capacity of a channel;

- use Shannon's channel coding theorem to bound the achievable rate of reliable communication over a channel;

- use Bayes' rule to decode corrupted messages sent using an error-correcting code;

- evaluate the rate and reliability of such codes;

- define the jointly typical sets of a source and channel, and use such sets to decode outputs from the channel;

- draw the confusability graph of a given channel, and describe the channel depicted by a given confusability graph;

- compute the independence number and the zero-error capacity of a confusability graph;

Course website

Updated information about the course can be found on http://homepages.cwi.nl/~schaffne/courses/inftheory/2015/Study Material

The material will be presented in black-boards lectures. The following are good references:- [CF] Ronald Cramer, Serge Fehr: The Mathematical Theory of Information, and Applications, lecture notes, Version 2.0

- [CT] Thomas M. Cover, Joy A. Thomas. Elements of information theory, 2nd Edition. New York: Wiley-Interscience, 2006. ISBN 0-471-24195-4.

- [MacKay] David J. C. MacKay. Information Theory, Inference, and Learning Algorithms. Cambridge: Cambridge University Press, 2003. ISBN 0-521-64298-1

Lectures and Exercise sessions (2 x 45min each)

please check Datanose for the definite times and locations.Homework, exam, and grading

This is a 6 ECTS course, which comes to roughly 20 hours of work per week.There will be homework exercises every week to be handed in one week later. The answers should be in English. Feel free to use LaTeX, here is a template to get you started, but readable handwritten solutions are fine, too. Cooperation while solving the exercises is allowed and encouraged, but everyone has to hand in their own solution set in their own words.

There will be a final written exam. The exam is open-book, meaning that you can bring the study material [CF], [CT], [MacKay] mentioned above as well as any notes you made, but no electronic devices are allowed. Here is the written exam from Spring 2014.

The final grade for the course consists by 1/2 of the average homework grade (ignoring the worst grade) and 1/2 of the grade obtained at the final exam.

Course schedule Fall 2015

The following table provides an overview of the course contents as currently planned:

|

Day |

Contents |

[CF] |

[CT] |

[MacKay] |

Exercises |

|

|---|---|---|---|---|---|---|

|

1 |

Wed 28 Oct |

Introduction, Probability Theory, Entropy |

2.1 |

exercise sheet #1 | ||

|

Fri 30 Oct |

Preparation Homework: Understand Section 2.2 of [CF], solve Exercise 9 of Sheet #1 Jensen's inequality, properties of the entropy, mutual information and dependence Blackboard Photo 1 Photo 2 Photo 3 Photo 4 Photo 5 Photo 6 Photo 7 Photo 8; |

3.1–3.3 |

2.1, 2.2, 2.6 |

|||

|

2 |

Wed 4 Nov |

Preparation Homework: Figure out how to construct a Huffman code Data Compression: symbol codes, Kraft's inequality, source-coding theorem (symbol-code version), Huffman codes |

5.1, 5.2 |

5 |

5, L4 |

exercise sheet #2 |

|

Fri 6 Nov |

Asymptotic Equipartition Theorem (AEP), the source coding theorem (asymptotic version) |

3 |

4.4 |

|||

|

3 |

Wed 11 Nov |

Preparation Homework: Play the online game of 20 questions d-ary Huffman codes, 20 questions, optimality and disadvantages of Huffman codes, codes as probability distributions, arithmetic codes Slides #5 Mathias's slides on arithmetic coding Blackboard Photo 1 Photo 2 Photo 3 Photo 4 Photo 5 Photo 6 Photo 7 Photo 8 |

5.9, 13.3 |

6, L5 |

exercise sheet #3 | |

|

Fri 13 Nov |

Stochastic Processes, Entropy rates Slides #6 Mathias' notes on Random Processes and Ergodicity Blackboard Photo 1 Photo 2 Photo 3 Photo 4 Photo 5 Photo 6 Photo 7 Photo 8 Photo 9 |

4 |

||||

|

4 |

Wed 18 Nov |

Ergodicity Slides Definitions, Facts, and Examples about Random Processes Blackboard Photo 1 Photo 2 Photo 3 Photo 4 Photo 5 Photo 6 Photo 7 Photo 8 |

exercise sheet #4 (updated) | |||

|

Fri 21 Nov |

Entropy diagrams, Markov chains, data-processing inequality, perfectly secure encryption

Insecurity of Key Reuse in OTP Blackboard Photo 1 Photo 2 Photo 3 Photo 4 Photo 5 Photo 6 Photo 7 Photo 8 Photo 9 Photo 10 Photo 11 Entropy rate of a Markov chain, with an example; The definition of ergodicity; a hint for exercise 5. |

3.3–3.4, 4 |

2.5, 2.8, 7.11 |

8 |

||

|

5 |

Wed 25 Nov |

Noisy channels, error-correcting codes, Hamming code Blackboard Photo 1 Photo 2 Photo 3 Photo 4 Photo 5 Photo 6 Photo 7 Photo 8 |

7.11 |

1, L1 |

exercise sheet #5 | |

|

Fri 27 Nov |

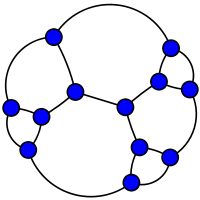

Preparation Homework: look up and remember the definitions of the following concepts: graph, vertex,

edge,

independent set, independence number. What is the independence number of Zero-error channel coding, Shannon capacity of a graph Blackboard Photo 1 Photo 2 Photo 3 Photo 4 Exercise class: Comments on Ex. 1; Parity check graphs and edit costs; Hamming's error-detecting code, as a cube; with noise vector examples; Stirling's approximation, a graphical channel specification, and the relation between graphs and channels |

7.8 |

||||

|

6 |

Wed 2 Dec |

Fano's inequality, Channel capacity, Shannon's channel coding theorem: intuition and proof of converse |

2.10, 7.9–7.10 |

exercise sheet #6 | ||

|

Fri 4 Dec |

Shannon's channel coding theorem Blackboard Photo 1 Photo 2 Photo 3 Photo 4 Photo 5. First hint for exercise 2; second; the channel from exercise 6. |

7.1–7.7 |

9, 10 |

|||

|

7 |

Wed 9 Dec |

Source-channel separation, Quantum Information Theory Blackboard Photo 1 Photo 2 Photo 3 Ronald de Wolf's lecture notes on Quantum computing |

7.13 |

exercise sheet #7 (slightly updated) | ||

|

Fri 11 Dec |

Quantum Cryptography Serge Fehr's lecture notes on Quantum Cryptography Photos from the exercise class: The derivative of the binary entropy equals the binary logit function. Matrix notation for channels; an example channel: picture 1, picture 2, and picture 3. Strong products, and a part of the noisy 5-typewriter's confusability graph. Symmetry and concavity: explanation, example, and illustration. |

Life after "Information Theory"

If you got hooked on the world of entropies, you have several options after the course to pursue the topics of information theory and cryptography:- Talk to Christian about the possibilities of doing a semester project or master project in information theory or cryptography. He can also hook you up with other people at the ILLC, at CWI or in the rest of the world, working on different aspects of information theory.

- Follow Ronald de Wolf's course about combinatorics with computer science applications at the university of Amsterdam, starting Spring 2016.

- Follow Leen Torenvliet's course about Kolmogorov complexity at the university of Amsterdam, starting Spring 2016.

- Follow various online classes such as Raymond W. Yeung's Information Theory course, Dan Boneh's crypto, crypto II, Jon Katz's crypto class or Umesh Vazirani's course about quantum computing.